In February 2016, Google (YouTube’s parent company) announced plans to divert its users away from extremist content towards counter-narratives meant to challenge the appeal of extremist material.

As one senior executive for Google told British MPs: "We should get the bad stuff down... This year... we are running two pilot programmes. One is to make sure that these [counter narrative] types of videos are more discoverable on YouTube. The other one is to make sure when people put potentially damaging search terms into our search engine... they also find this counter narrative." The announcement was covered in major British outlets, including the Guardian, Telegraph, and Daily Mail, as well as U.S. outlet NBC News.

Despite the positive press the announcement generated, Google continues to host extremist and terrorist content on YouTube. In March 2017, more than 250 companies froze or partially froze their advertising accounts with Google after discovering that their ads were appearing next to extremist, hateful, and terrorist content on YouTube. According to reports, the revenue loss to Google amounted to as much as $750 million.

Although Google pledged to do more to bolster "brand safety," the company has continued to host violent and extremist materials. After the March 2017 Westminster attack, YouTube was "inundated with violent ISIS recruitment videos," according to findings by the U.K. government. The British government noted that YouTube failed to block this slew of content, despite the videos being easily searchable and posted under usernames like "Islamic Caliphate."

This content is dangerous and has at times proved deadly: extremists have been found to self-radicalize by watching extremist content on YouTube, including lectures by the extremist preachers Anwar al-Awlaki and Ahmad Musa Jibril. Orlando gunman Omar Mateen was found to have been radicalized in part by watching Awlaki videos online. The 2013 Boston bombers, Tamerlan and Dzhokhar Tsarnaev, were later found to have downloaded Awlaki's YouTube videos onto their electronic devices. One of the assailants in the June 3, 2017, London Bridge attack was also reportedly radicalized while watching Jibril's videos on YouTube.

The Counter Extremism Project has repeatedly called for a serious effort to reduce the extensive availability of extremist and radicalizing content on YouTube. The logical action for YouTube, and for other companies that operate privately owned content-hosting services, is to remove such extremist content in the same way they remove other restricted (but legal) material such as pornography. It is unclear what expertise technology companies have in devising or identifying effective counter-narrative resources and content.

Part 1 of Google’s Program: "Get the bad stuff down":

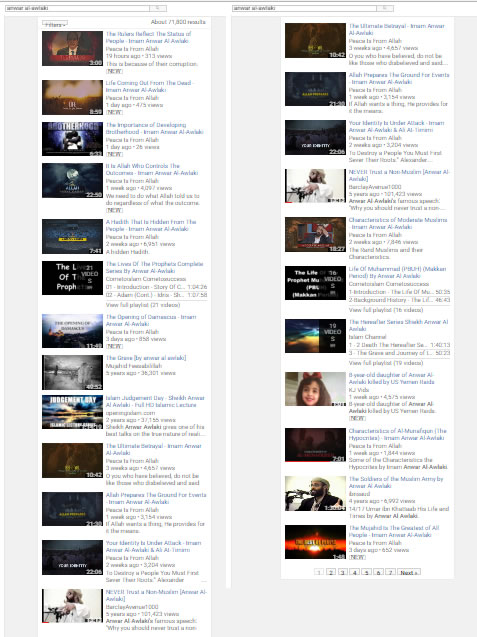

The Counter Extremism Project has repeatedly advocated for YouTube to remove extremist content. Extremist material is currently far too readily available on YouTube. A search for the notorious al-Qaeda recruiter Anwar al-Awlaki on YouTube, for example, yielded 70,100 results as of August 30, 2017. Many of the results are lectures by Awlaki urging violent jihad against non-Muslims, and against Americans in particular.

The "bad stuff," including lectures by Anwar al-Awlaki, who has played a role in radicalizing dozens of people toward terrorism, is by no means being taken down. The Counter Extremism Project has tracked the availability of Anwar al-Awlaki videos on YouTube from December 2015 through August 2017. In that time, CEP has found the following:

Anwar al-Awlaki videos have been consistently, and even increasingly, available on YouTube.

On December 19, 2015, a search for "Anwar al-Awlaki" on YouTube yielded 61,900 results. By August 30, 2017, that number had risen to 70,100. In November 2017, Google acceded to external pressure and pledged to drastically reduce Awlaki’s presence on its YouTube platform.

Part 2 of Google’s Program: Make counter-narrative videos "more discoverable" on YouTube:

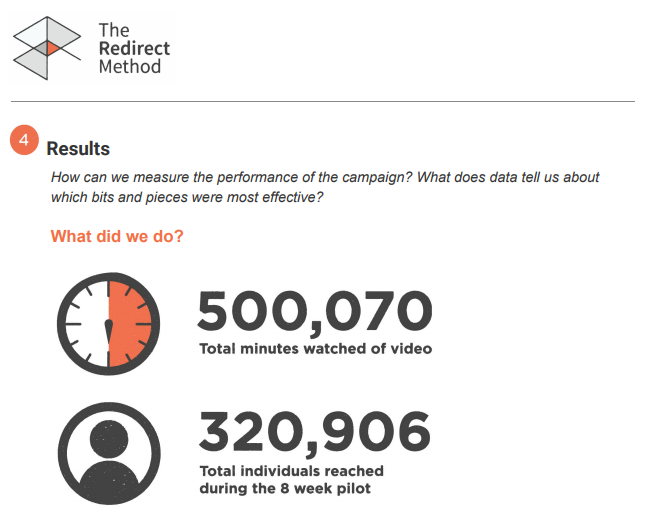

On July 20, 2017, Google formally announced the launch of its Redirect Method on YouTube, a program designed to identify users searching for ISIS-linked content on YouTube and expose them to advertisements and video playlists that run counter to ISIS’s narratives. While the program is potentially useful, Google has not provided convincing evidence to support its claims that the Redirect Method actually dissuades potential ISIS supporters.

Instead, Google highlights statistics purporting to show how many YouTube users were "reached" and how many "minutes of video" users watched. These are metrics for measuring the efficacy of digital advertising, not of a counter-radicalization program. For example, no information is provided about the individuals who watched the videos or whether any of them were, in fact, on the path to radicalization. Most importantly, these metrics do not tell us whether watching Google’s videos actually dissuaded any of the viewers from supporting ISIS or other extremist groups (openly or secretly), or from carrying out acts of violence.

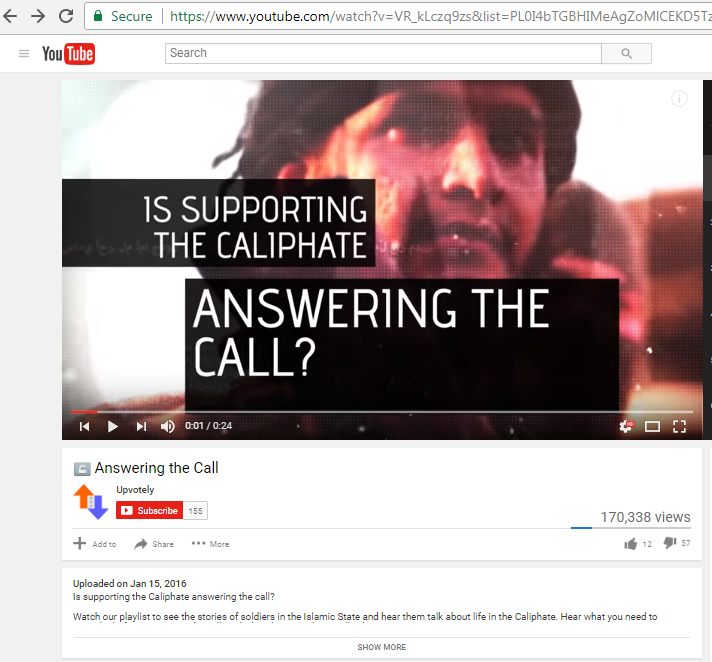

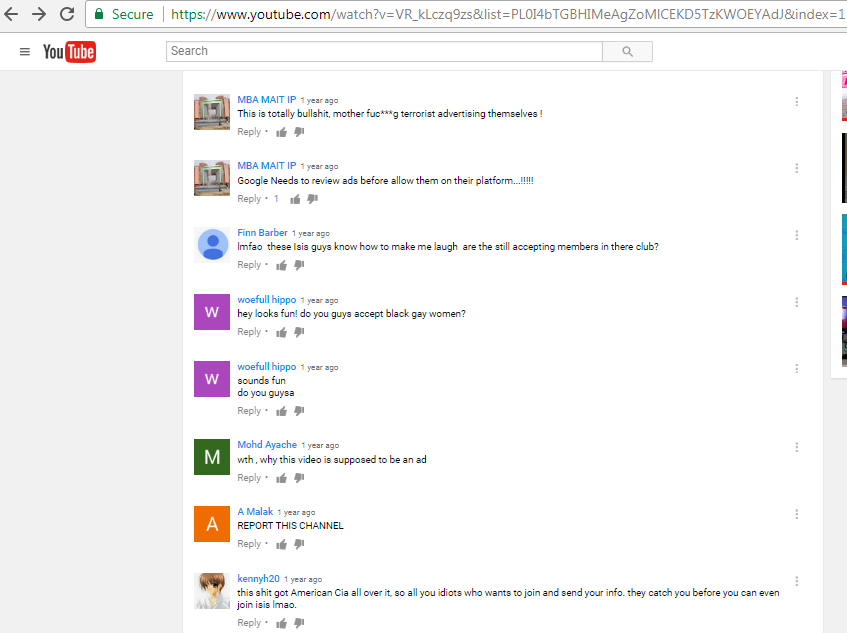

There are serious reasons to doubt that the Redirect program is achieving its intended objective. For example, Google presented one counter-narrative playlist designed to undermine ISIS narratives, "Answering the Call," as a successful case study for the Redirect Method. Although the video "reached" more than 100,000 viewers, Google neglects to mention that it was overwhelmingly unpopular among its viewing audience, receiving 57 dislikes compared to only 12 likes as of July 25, 2017. That is a telling data point for a video intended to influence and impact its audience. Moreover, viewership numbers tell us little about who the viewers were, whether any of them were in fact ISIS sympathizers, or whether any were influenced or dissuaded by Redirect from engaging in terrorist violence.

Furthermore, a large portion of commenters on the Redirect video appeared to be people visibly concerned by ISIS content rather than interested in or motivated by it. Although the commenters were a relatively tiny subset of the video’s total viewership, this may point to a flaw in Google’s advertisement targeting methods. Neither of these data points proves that the program is entirely ineffective at reaching some members of ISIS’s audience, or even at somewhat discouraging some of them from aligning themselves with ISIS. Still, the paucity of meaningful data from this program is serious cause for concern.

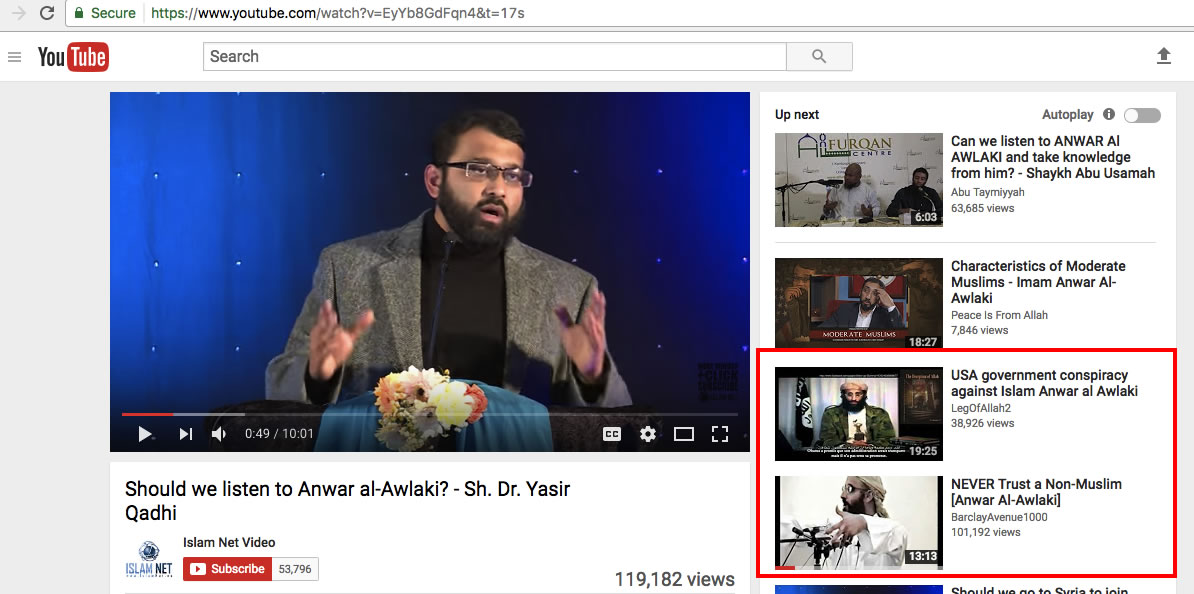

Concerns about the Redirect program aside, the fact remains that counter-narrative content and voices continue to be drowned out by the high volume of extremist material that remains readily available on YouTube. In an analysis conducted in August 2016, the first page of YouTube search results for "Anwar al-Awlaki" included one counter-narrative video out of 18. In subsequent searches conducted in June 2017, few if any counter-narrative videos appeared on the first page of results, and those that did appear were often ranked lower and displayed less prominently than Awlaki’s own speeches.

These findings are disappointing given that authoritative material countering Awlaki is available online. For example, a video by Dr. Yasir Qadhi provides potentially useful context about Anwar al-Awlaki and the danger of his message. Dr. Qadhi says: "My business is to judge [Anwar al-Awlaki’s] legacy. And his legacy has a lot of good and also a lot of harm and danger. So should you listen to him? If you want to take the good and you’re qualified to only take the good, fine. But be careful that his methodology of jihad, in my humble opinion... It sounds so good, but what good will it do you? ... Our religion does not tell us to die foolish deaths." This video has begun to appear on the first page of YouTube search results, but it did not consistently appear in YouTube’s sidebar when users watched Anwar al-Awlaki’s hateful videos.

Part 3 of Google’s Program: Make sure that "when people put potentially damaging search terms into our search engine" they also find "counter narrative":

The Counter Extremism Project has seen mixed evidence on the success of this part of the YouTube program. Although CEP has occasionally seen English-language videos that may be considered counter-narrative, these videos are few and far between. As of August 2017, a search for "Anwar al-Awlaki" overwhelmingly showcases lectures by Anwar al-Awlaki, including lectures urging Muslims to take up violence.

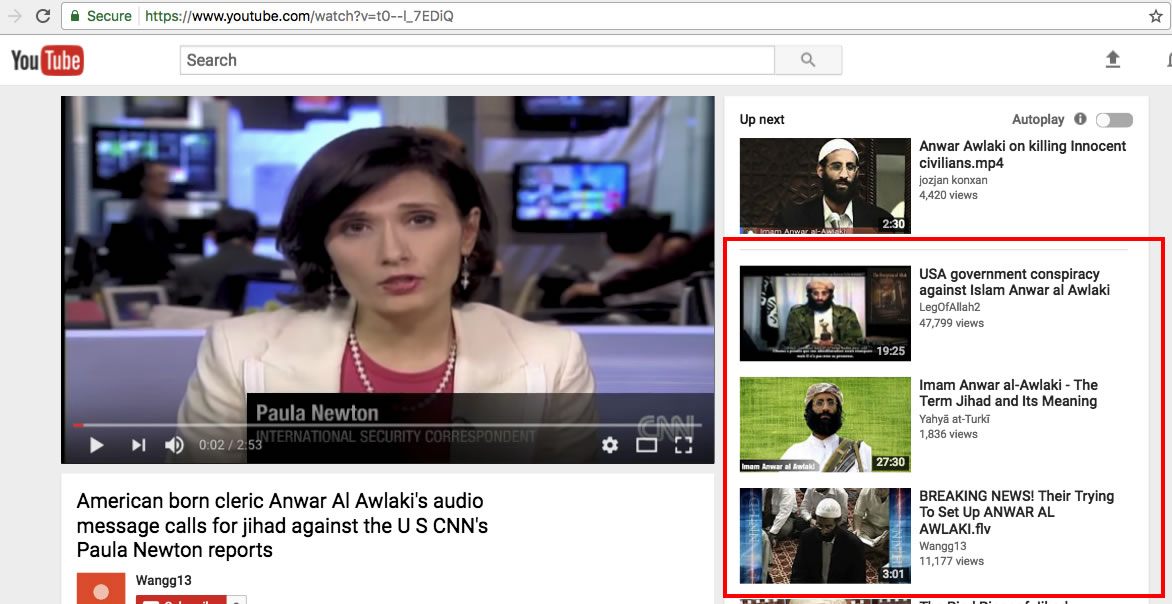

Even a CNN news video about Awlaki was flanked by pro-Awlaki lectures and videos.

Anwar al-Awlaki material fills the recommendations sidebar for CNN Video (June 2017)

The autoplay and recommendations sections are packed with lectures by Awlaki and pro-Awlaki videos. Counter-narrative videos (offering moderate perspectives on Awlaki’s content) are not readily available or promoted.

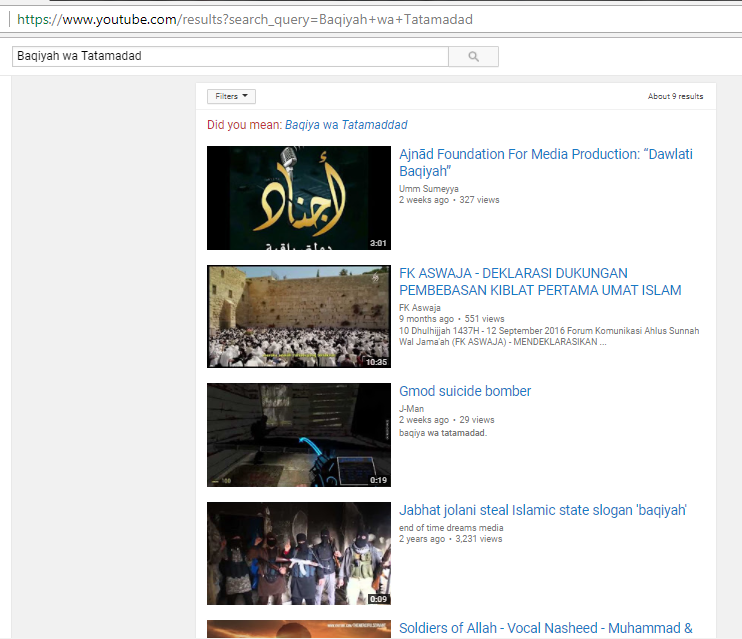

On July 20, 2017, Google formally announced the launch of its Redirect Method program, through which the company sought to make counter-narrative videos more discoverable for individuals searching for pro-ISIS content. Google explained that it "focused on terms suggesting positive sentiment towards ISIS, for instance: Supporter slogans (e.g. Baqiyah wa Tatamadad; Remaining & Expanding) [and] deferential terms to describe ISIS (e.g. al-Dawla al-Islamiyya vs. Daesh)..."

On July 24, 2017, CEP conducted an independent round of searches using these same terms to look for the counter-narrative content that Google had proposed surfacing for such searches. CEP was alarmed to discover that an English-language search for the pro-ISIS term "Baqiyah wa Tatamadad" returned more extremist content than counter-narratives. Only after trying several alternative spellings of the term did CEP find videos that appeared to counter ISIS’s narratives.

Conclusion

Google has declared its intention to counteract the dangerous role played by extremists on YouTube and has launched its Redirect program, intended to discourage potential ISIS recruits from taking up the cause of the terrorist group. However, the program lacks meaningful monitoring and evaluation.

Furthermore, Google’s stated intention to reduce the terrorist recruitment threat on its platform is undermined by its persistence in hosting and promoting content by extremists. In November 2017, Google acceded to external pressure and pledged to drastically reduce Awlaki’s presence on YouTube. But until Google takes the step of systematically removing all terrorist recruitment videos, extremists will continue to enjoy access to extremist, incendiary, and inciting material on YouTube that can set them on the path to self-radicalization and terrorist violence.